It’s now 45 years since the Intel 8086 CPU was first released, introducing us to the x86 instruction set that’s still the basis of modern PCs today. Amazingly, though, the most important chip in the history of Intel – the chip which began the whole x86 CPU line – was only intended to be a stopgap.

In 1976, there were no personal computers as we know them today, only a range of specialist scientific tools, industrial devices, mainframes and microcomputers, which were slowly trickling down from big corporations and research institutions into the small business market.

At this point, Intel was just one of several major players in the emerging processor market. Its 8-bit 8080 microprocessor had made the jump from calculators and cash registers to computer terminals and microcomputers, and was the core platform for the big OS of the era, CP/M.

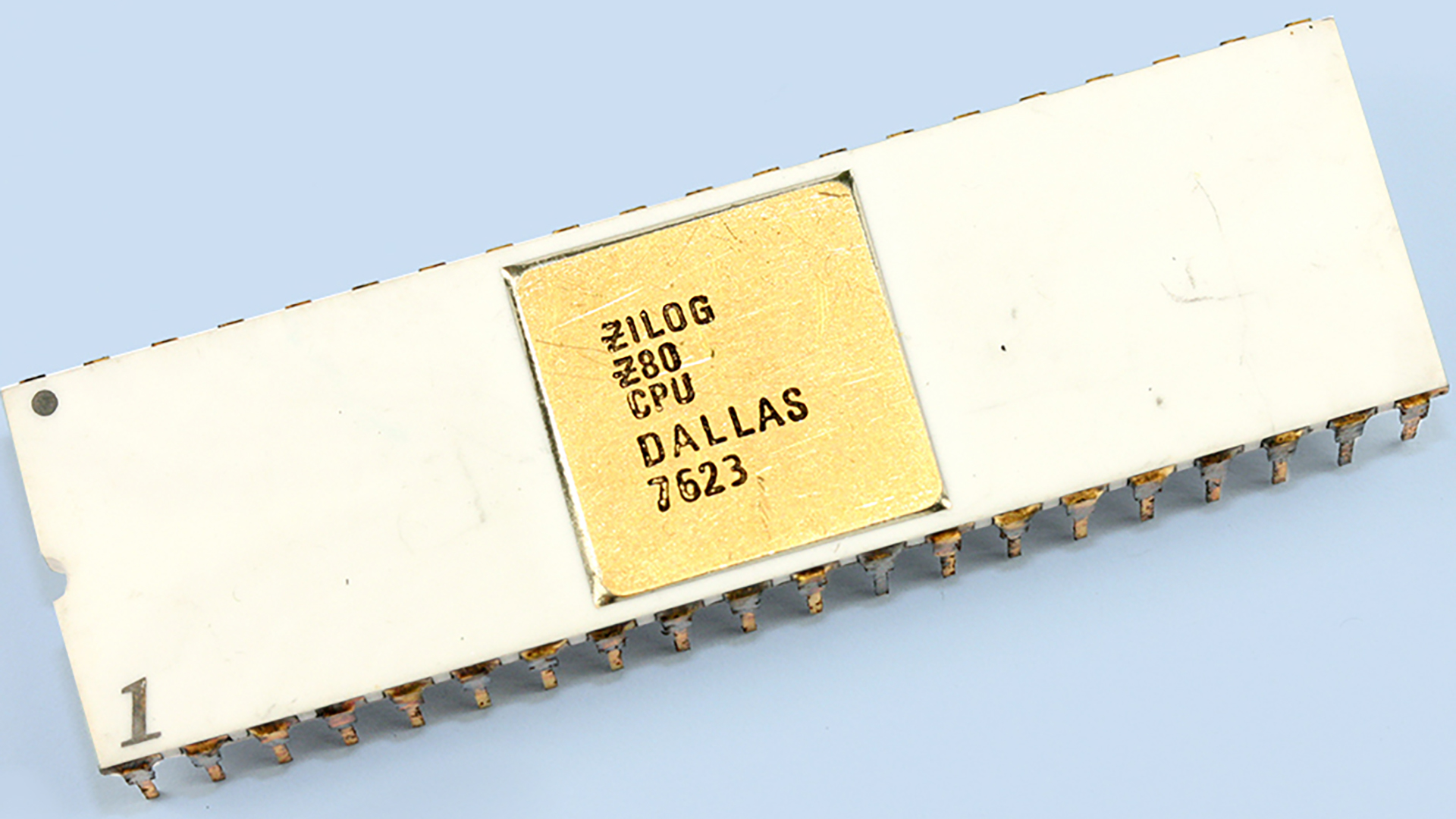

However, Intel had some serious competition. Zilog’s Z80 was effectively an enhanced 8080, running a broadly compatible machine code with some added functions for processing strings – you might remember it from 1980s home computers such as the ZX Spectrum.

The Motorola 6800 had similar capabilities to Intel’s chip, and was beginning to find favor with Altair and other manufacturers of early kit microcomputers. To make matters worse, both Motorola and Zilog were working on new 16-bit designs, the Motorola 68000 and the Zilog Z8000.

Intel was working on its own revolutionary 32-bit architecture, dubbed Intel iAPX 432, but had run into some complex challenges. Development of iAPX 432 was taking Intel’s team of engineers much longer than expected. The architecture spanned three chips, and the process technology required to manufacture them was still years away.

Zilog was eating into Intel’s market share with its blockbuster Z80 chip – you might remember it from the Sinclair ZX Spectrum!

What’s more, the instruction set was focused on running new high-level, object-oriented programming languages that weren’t yet mainstream; Intel’s own operating system for it was being coded entirely in Ada. Concerned that Zilog and Motorola could eat its market share before iAPX 432 even launched, Intel needed a new and exciting chip to hold its competitors at bay.

Intel had followed up the 8080 with its own enhanced version, the 8085, but this was little more than an 8080 with a couple of extra instructions to reduce its reliance on external support circuitry. Knowing it needed more, Intel looked to Steven Morse, a young software engineer who had just written a critical report on the iAPX 432 processor design, and asked him to design the instruction set for a new Intel processor. It had to be 8080-compatible and able to address at least 128KB of memory – double the maximum 64KB supported by the 8080.

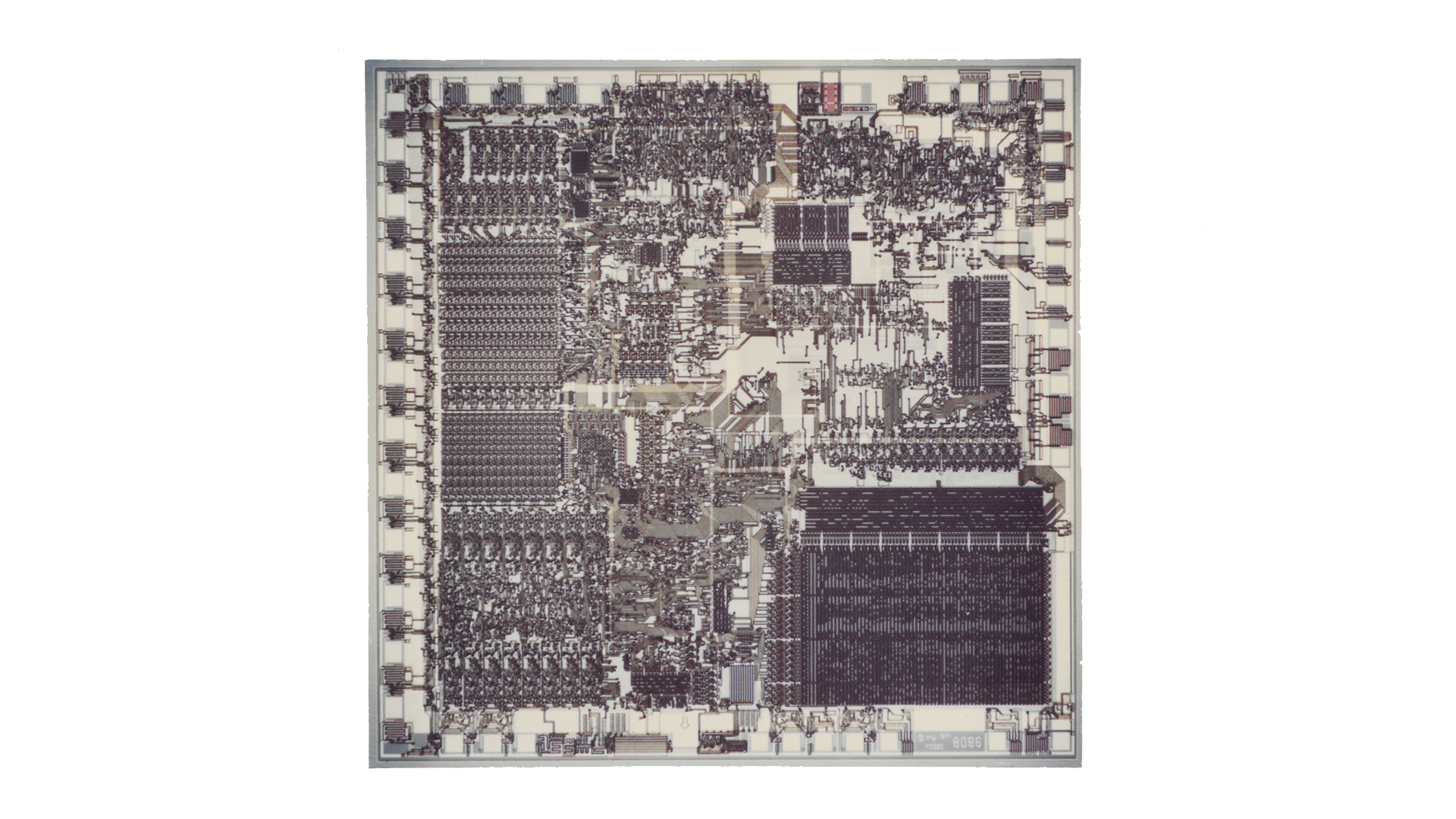

The die of the original 8086. It was one of the most complex processors of the time with over 29,000 NMOS transistors

Designing the Intel 8086

Morse worked with a small team, including project manager Bill Pohlman and logic designer Jim McKevitt, to design and implement the new architecture. He typed specs and documentation through a text editor on a terminal connected to an Intel mainframe, even creating diagrams from ASCII characters to illustrate how the new CPU would work.

Meanwhile, Jim McKevitt worked on how data would be passed between the CPU and the supporting chips and circuitry across the system bus. After the first couple of revisions, Intel brought in a second software engineer, Bruce Ravenel, to help Morse refine the specs. As the project neared completion, the team grew to develop the hardware design, and was able to simulate and test what would become the core of the x86 instruction set.

Left pretty much alone by Intel management to work on an architecture Intel saw as having a limited lifespan, Morse and his team had freedom to innovate. The 8086 design had some clear advantages over the old 8080. It could address not merely double the RAM, but a full 1MB. Rather than working with 8 bits at a time, it could handle 16-bit operations.
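To put those memory figures in context, the size of an address space follows directly from the width of the address: 16 bits reach 64KB, while 20 bits reach 1MB. The 8086 formed its 20-bit addresses from a 16-bit segment and a 16-bit offset, a detail this article doesn’t go into. The snippet below is a minimal Python sketch of that arithmetic; the function names are hypothetical, chosen purely for illustration.

```python
def address_space_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with the given address width."""
    return 1 << address_bits


def physical_address(segment: int, offset: int) -> int:
    """Combine a 16-bit segment and 16-bit offset into a 20-bit 8086 address.

    The segment is shifted left by four bits (multiplied by 16) and added to
    the offset; the result wraps around at 1MB because the chip has only
    20 address lines.
    """
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must each fit in 16 bits")
    return ((segment << 4) + offset) & 0xFFFFF


if __name__ == "__main__":
    print(address_space_bytes(16) // 1024, "KB")   # 8080-style 16-bit addressing
    print(address_space_bytes(20) // 1024, "KB")   # 8086 20-bit addressing
    print(hex(physical_address(0x1234, 0x0010)))   # 0x12350
```

Because each segment still spans only 64KB, the programming model stayed close to the 8080’s 16-bit addresses, which is one way to read the backward-compatibility goal described above.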

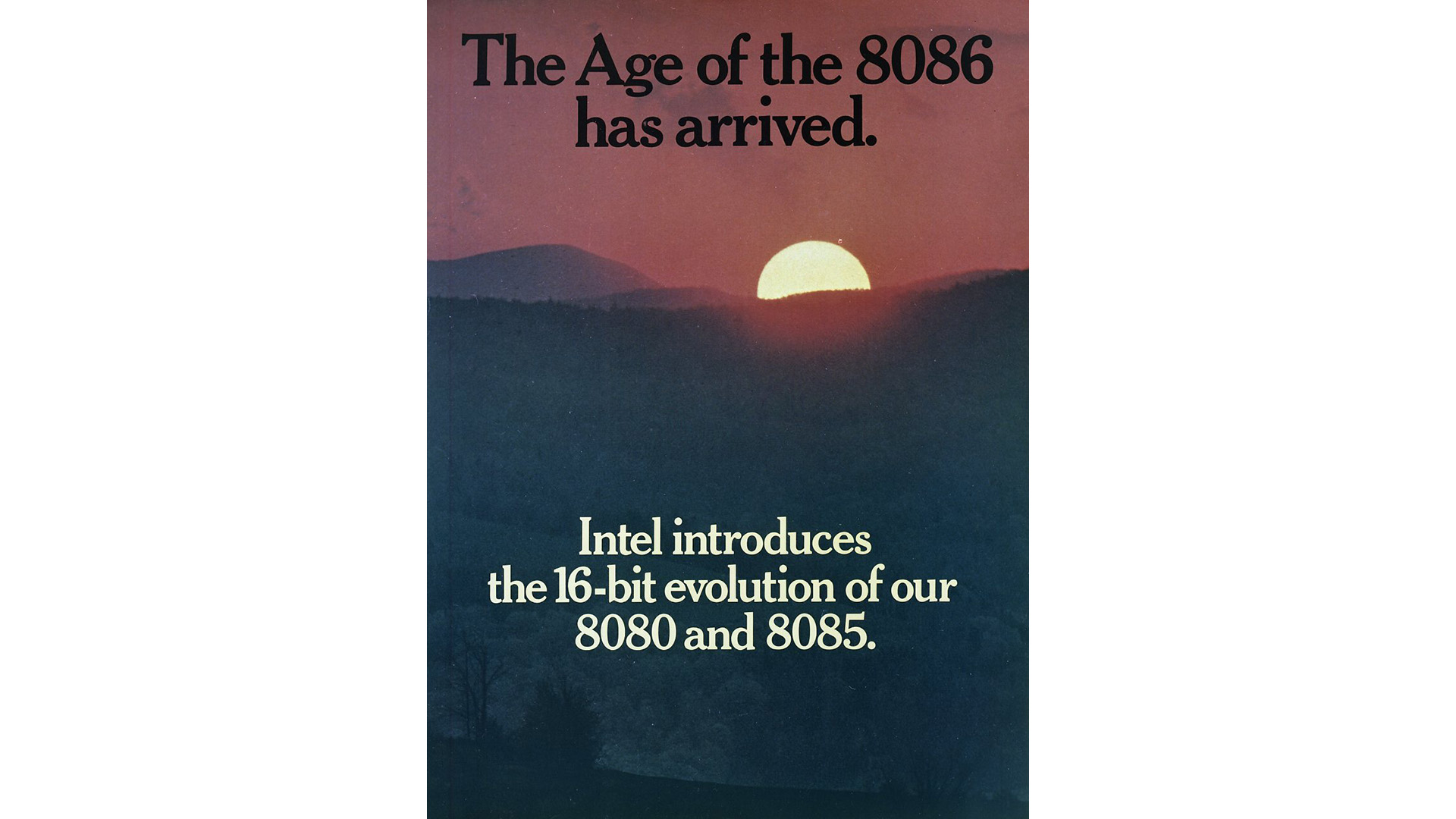

A January 1978 advertisement announces the arrival of the Intel 8086, before it reached market in June 1978

It had a selection of new instructions and features, including some designed to handle strings more efficiently and others designed to support high-level programming languages. It made up for one of the 8080’s biggest shortfalls with hardware support for multiply and divide operations. The 8086 design made a coder’s life easier.

However, Morse also took a new approach to processor design. As he told PC World in a 2008 interview, ‘up until that time, hardware people did all the architectural design, and they would put in whatever features they had space for on the chip. It didn’t matter whether the feature was useful or not’.

Instead, Morse looked at what features could be added – and how they could be designed – in order to make software run more efficiently. The 8086 made existing 8080 software run better, and was designed to work more effectively with the software and programming languages that were emerging, unlike both iAPX 432 and Zilog’s Z8000.

What’s more, the 8086 was designed to work with additional processors, co-processors and other components as the heart of a computer system. In the foreword to his book, The 8086 Primer, Morse talks of how ‘the thrust of the 8086 has always been to help users get their products to market faster using compatible software, peripheral components and system support’.

It was designed as the heart of ‘a microprocessing family’ with multiple processors supporting the CPU. This, in turn, made the 8086 a good fit as microcomputers evolved into PCs.

The 8088 made it big as the CPU in the new IBM 5150, or IBM PC as it came to be known, putting the scalable x86 architecture on the map

A star is born

The 8086 was hardly an overnight success. The Z80 continued to cause Intel nightmares, appearing in more affordable business computers running CP/M. The most exciting processor in development in the late 1970s wasn’t one of Intel’s, but Motorola’s 68000, which went on to dominate the workstation market, and create a new generation of home and business computers.

By comparison, the 8086 made it into some new microcomputers and terminals, plus a few more specialist devices, but it wasn’t the market leader. In March 1979, frustrated by Intel’s working culture, Steven Morse left the company.

Then, a few weeks after Morse left, Intel launched a revamp of the 8086: the 8088. The new chip was actually weaker; half its data pins were removed, so it could only send or receive 8 bits of data at a time. However, this meant it could work with existing 8-bit support chips designed for the 8080, and it didn’t need as much complex circuitry to run.
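The cost of halving the data bus can be sketched with some simple arithmetic. The Python snippet below is a deliberately simplified model, not a timing simulation: it only counts how many bus transfers are needed to move a given number of bytes, and it ignores alignment, instruction prefetching and clock speeds. The function name is hypothetical.

```python
def bus_transfers(num_bytes: int, bus_width_bits: int) -> int:
    """Count the transfers needed to move num_bytes over a bus of the given width.

    Simplified model: assumes every transfer is full-width and aligned.
    """
    bytes_per_transfer = bus_width_bits // 8
    # Ceiling division without importing math
    return -(-num_bytes // bytes_per_transfer)


if __name__ == "__main__":
    word = 2  # one 16-bit value, in bytes
    print("8086 (16-bit bus):", bus_transfers(word, 16), "transfer")
    print("8088 (8-bit bus): ", bus_transfers(word, 8), "transfers")
```

Under these assumptions the 8088 needs two transfers for every 16-bit value where the 8086 needs one, which is the price paid for compatibility with cheaper 8-bit support chips.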

Given that the 8086 architecture was backward compatible, and that Intel was investing heavily in support, building new versions of existing computers and devices around the 8088 became a no-brainer. At this point, Intel found out about a new project underway at IBM.

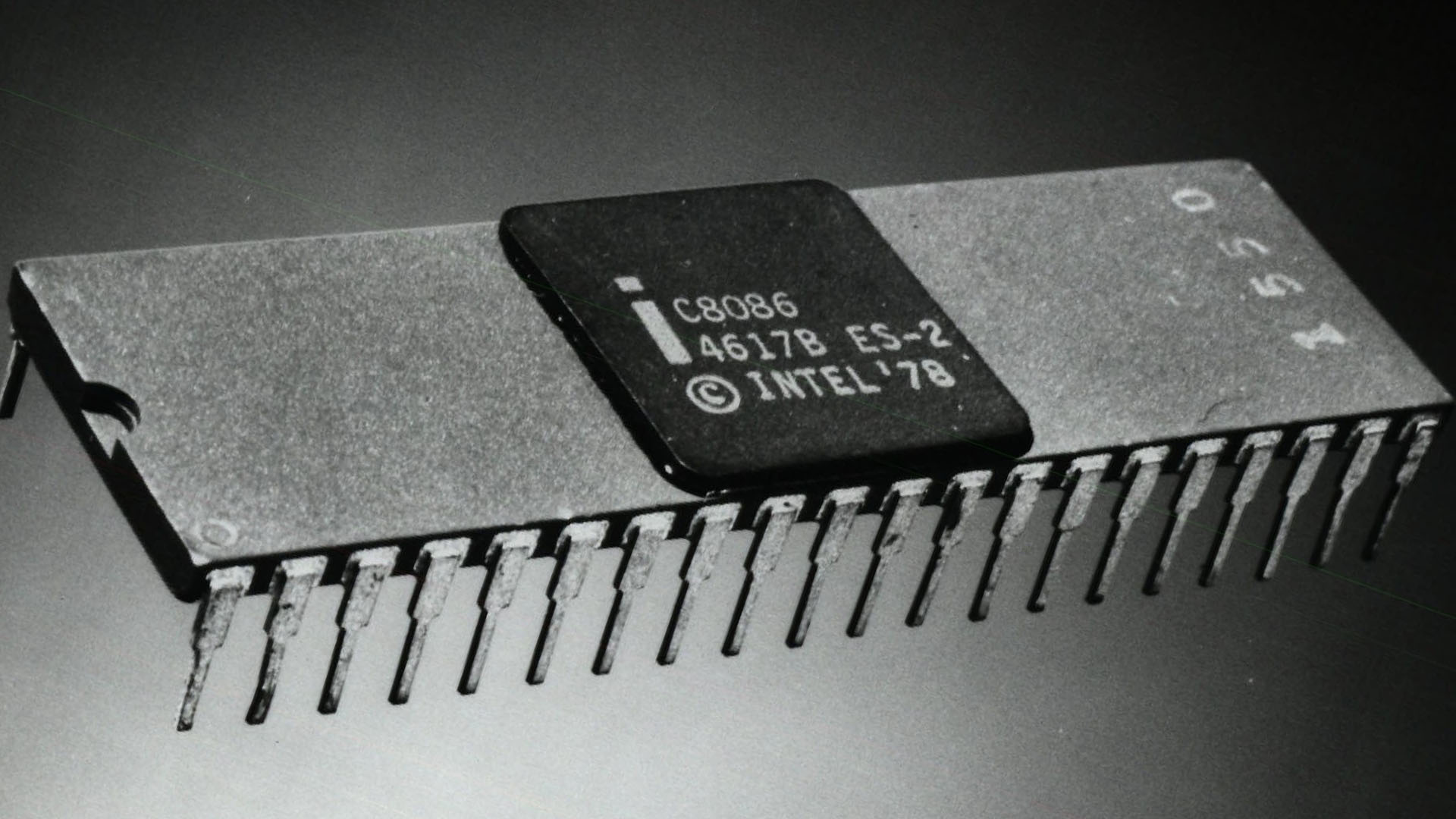

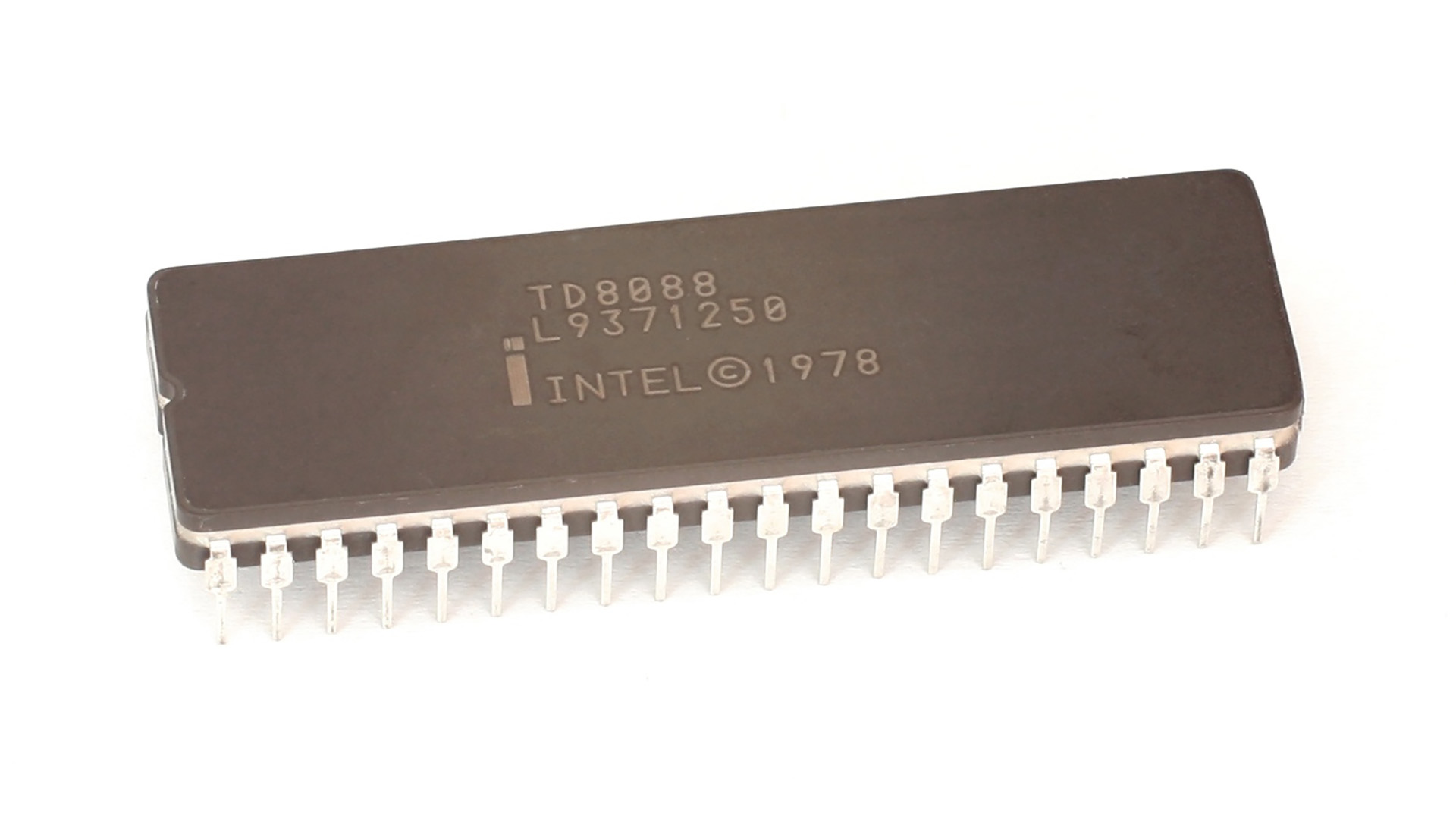

It didn’t look anything special, but the 8086 CPU ended up founding a mighty dynasty of CPUs

In 1980, an Intel field sales engineer, Earl Whetstone, began talking to an IBM engineer, Donald Estridge, about a top-secret project to develop a new kind of computer. Schedules were tight, meaning IBM needed to work with an existing processor, and Estridge faced a choice between the Zilog Z8000, the Motorola 68000, and Intel’s 8086 and 8088.

Whetstone sold Estridge on the technical merits of the 8086 architecture and the lengths Intel would go to support it, while IBM’s bean counters realized that the 8088 could help them keep costs down. IBM was already familiar with Intel, and was working with a version of Microsoft Basic that ran on the 8080.

A version of 86-DOS, sold as part of a Seattle Computer Products kit, would become the basis of Microsoft’s MS-DOS. The project became the IBM PC 5150, or the original IBM PC, and because it used off-the-shelf hardware and Microsoft’s software, it became the inspiration for an army of PC clones.

The Intel 8088 was a cut-down 8086, but its broad compatibility with existing coprocessors and hardware made it ideal for the IBM PC. Photo from CPU Collection of Konstantin Lanzet, Wikimedia, CC BY-SA 3.0

As they say, the rest is history. Had Intel focused on iAPX 432 and IBM chosen Motorola, computing might have gone in a different direction. Intel was smart enough to double down on x86, moving on to the 286 and 386 CPUs and – eventually – abandoning what was supposed to be its flagship line.

What’s more, while the x86 architecture has now evolved beyond all recognition, even today’s mightiest Core CPUs can still run code written for the lowly 8086. It started as a stopgap, but the 8086 has stood the test of time.

Earl Whetstone, Intel Field Engineer, conquers the PC chip market

If you’re looking to upgrade to one of the latest CPUs based on the x86 architecture introduced by the 8086, make sure you read our full guide to the best gaming CPU, which covers the best options at a range of prices. One of our current favorites from the firm that brought us the first x86 architecture is the Intel Core i5-13600K, which offers great value. You can also read our full guide on how to overclock the Core i5-13600K.

We hope you’ve enjoyed this retrospective about the first 16-bit Intel CPU. For more articles about the PC’s vintage history, check out our Retro tech page. Oh, and yes, we really put an 8086 (and an AMD one) in a cupcake to make the main photo for this story.