It’s rare we get a new type of memory, and Samsung recently surprised us with its new GDDR6W tech. The ‘W’ means it’s ‘wide’ and can send twice the data per cycle of standard GDDR6 memory, doubling the bandwidth per chip. A data bus that is both wide and fast (22Gbps per pin) combines two performance factors that are typically mutually exclusive, so this is a significant breakthrough.

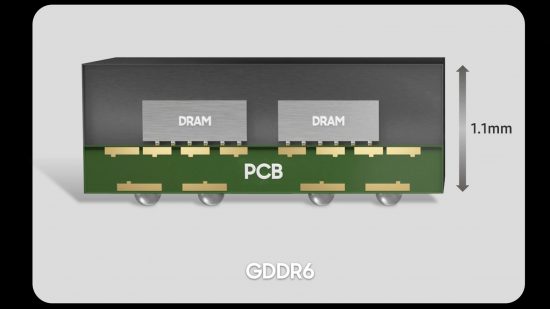

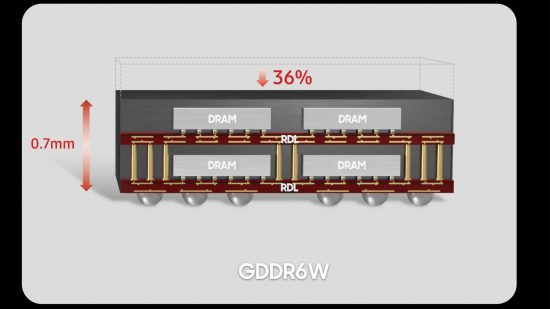

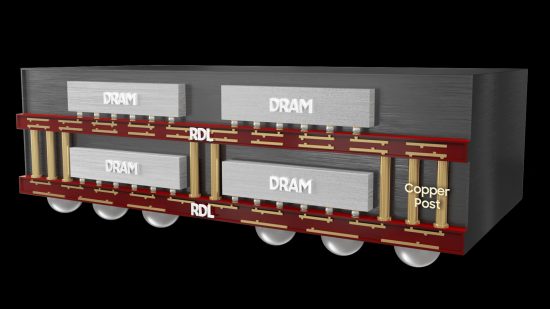

GDDR6W uses chip-stacking within each package (fan-out wafer-level packaging) to put one layer of memory ICs on top of another and connect them with tiny copper pillars. This also doubles the capacity of each package. It means a high-end GPU with 16GB of memory and a 256-bit interface could need only four GDDR6W chips, rather than the eight normally required, simplifying the PCB design. It’s ideal for gaming laptops, but useful on desktop cards too.
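To make the four-versus-eight arithmetic concrete, here is a minimal Python sketch. The per-package figures are assumptions that don't appear in this column: 32 data pins and 2GB for a standard GDDR6 chip, 64 pins and 4GB for GDDR6W, with both running at the 22Gbps per pin quoted above. The `MemoryPackage` class and `packages_needed` helper are hypothetical names used purely for illustration.

```python
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class MemoryPackage:
    name: str
    io_pins: int         # data pins per package (assumption, not from the article)
    capacity_gb: int     # capacity per package in GB (assumption)
    gbps_per_pin: float  # per-pin data rate; 22Gbps is the article's figure

    def bandwidth_gbs(self) -> float:
        """Peak bandwidth of one package in GB/s (pins x Gbps / 8 bits per byte)."""
        return self.io_pins * self.gbps_per_pin / 8


def packages_needed(pkg: MemoryPackage, bus_width_bits: int, capacity_gb: int) -> int:
    """Packages required to both fill the memory bus and reach the target capacity."""
    for_bus = ceil(bus_width_bits / pkg.io_pins)
    for_capacity = ceil(capacity_gb / pkg.capacity_gb)
    return max(for_bus, for_capacity)


# Illustrative figures only: see the assumptions in the paragraph above.
GDDR6 = MemoryPackage("GDDR6", io_pins=32, capacity_gb=2, gbps_per_pin=22.0)
GDDR6W = MemoryPackage("GDDR6W", io_pins=64, capacity_gb=4, gbps_per_pin=22.0)

if __name__ == "__main__":
    for pkg in (GDDR6, GDDR6W):
        count = packages_needed(pkg, bus_width_bits=256, capacity_gb=16)
        total = count * pkg.bandwidth_gbs()
        print(f"{pkg.name}: {count} packages, "
              f"{pkg.bandwidth_gbs():.0f}GB/s each, {total:.0f}GB/s total")
```

Under these assumptions the total bandwidth of the 256-bit card is the same either way. The gain is that half as many packages, and half as many sets of traces, deliver it.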

What I see, though, is that this technology is a stepping stone towards future CPU designs. Increasing DDR5 speeds is becoming too costly, and the DIMMs themselves are reaching the limit of the physical, plug-in design.

DDR5 speeds have soared since launch, with premium (expensive) motherboards and CPUs that won the silicon lottery even hitting effective frequencies of 8000MHz. These new bandwidth highs are great because CPU core counts have continued to grow unabated, and all of those cores need to talk to memory.

However, memory performance isn’t just governed by speed, but also latency. DDR5 has the former in spades, but it’s still not achieving lower latencies than a decent-quality kit of much cheaper DDR4 memory – this matters not only in gaming benchmarks, but also in everyday use.
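A rough way to compare kits is to convert CAS latency from clock cycles into nanoseconds. The sketch below assumes the usual rule of thumb that the memory clock runs at half the effective data rate, so one cycle lasts 2000 divided by the data rate in MT/s (the ‘effective frequency’ printed on the box). The three kits listed are hypothetical examples chosen for illustration, not figures from this column, and CAS latency is only one part of total memory latency.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """Convert CAS latency from clock cycles to nanoseconds.

    DDR memory transfers data twice per clock, so one clock cycle
    lasts 2000 / data_rate_mts nanoseconds.
    """
    return cas_cycles * 2000.0 / data_rate_mts


# Hypothetical kits for illustration: (label, data rate in MT/s, CAS latency in cycles)
EXAMPLE_KITS = [
    ("DDR4-3600 CL16", 3600, 16),
    ("DDR5-6000 CL36", 6000, 36),
    ("DDR5-8000 CL38", 8000, 38),
]

if __name__ == "__main__":
    for label, rate, cl in EXAMPLE_KITS:
        print(f"{label}: {cas_latency_ns(rate, cl):.2f}ns")
```

With these example timings, the DDR4 kit comes out at just under 9ns, against 12ns for the DDR5-6000 kit and 9.5ns for the DDR5-8000 one. Much faster DDR5 can therefore still trail good DDR4 on this measure.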

Reducing DDR5 system cost is difficult because the standard requires higher-quality PCBs and more advanced chips. Each DIMM also needs its own power regulation, and DDR5 motherboards for both AMD and Intel remain expensive. DDR5 is still in its early days, but we can expect the demands on memory bandwidth to keep increasing.

So what needs to change about future memory design to make it more affordable for everyone? Well, I foresee Samsung’s ‘W’ technology being used in lieu of plug-in DIMMs, where several DDR ‘W’ chips are attached directly to the CPU substrate. You would buy a CPU and choose 32GB or 64GB of memory as one item, just like you would spec a smartphone.

The memory would just come in the form of more chiplets added to the normal CPU packaging, a process that would have a lower cost compared to the expensive silicon interposer required for HBM memory. Bringing the memory as close as possible to the CPU silicon would enable Intel and AMD to achieve much faster speeds and potentially lower latency, without needing costly motherboard and DIMM designs.

You can imagine, for example, a mainstream 6/8-core CPU coming with dual-channel memory, while a 12/16-core CPU features tri-channel and a 24/32-core chip has quad-channel memory. This keeps a similar memory bandwidth per core throughout the whole stack, all without needing a new motherboard.
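As a back-of-the-envelope check on that idea, the Python sketch below works out peak bandwidth per core for each tier. It assumes every channel is 64 bits wide and runs at 6000MT/s, giving 48GB/s per channel. Both figures are illustrative assumptions rather than anything specified here, and the function name is hypothetical.

```python
def bandwidth_per_core_gbs(cores: int, channels: int,
                           data_rate_mts: float = 6000.0,
                           channel_width_bits: int = 64) -> float:
    """Peak memory bandwidth per core in GB/s.

    Per-channel bandwidth = transfers per second x bytes per transfer.
    The default data rate and channel width are illustrative assumptions.
    """
    per_channel_gbs = data_rate_mts * (channel_width_bits / 8) / 1000.0
    return channels * per_channel_gbs / cores


# Top core count in each tier from the paragraph above: (cores, channels)
SCALED_STACK = [(8, 2), (16, 3), (32, 4)]

if __name__ == "__main__":
    for cores, channels in SCALED_STACK:
        scaled = bandwidth_per_core_gbs(cores, channels)
        fixed = bandwidth_per_core_gbs(cores, 2)  # today's fixed dual-channel layout
        print(f"{cores} cores: {scaled:.1f}GB/s per core with {channels} channels, "
              f"{fixed:.1f}GB/s with dual-channel")
```

Under these assumptions, bandwidth per core still falls as you move up the stack, from 12GB/s to 9GB/s to 6GB/s. That is far gentler than a fixed dual-channel platform, where the 32-core chip would drop to 3GB/s per core. How close to ‘similar’ the tiers get depends on the memory speed attached to each one.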

There are obviously downsides. Choosing memory is a core part of the PC building experience and being able to reuse it in different builds is a big benefit. That said, the old ‘two spare DIMM slots to upgrade later’ setup is already dead. DDR5’s design has effectively killed four-DIMM builds because the achievable speed and reliability drop significantly if you’re using four high-speed modules.

AMD and Intel wouldn’t need a JEDEC stamp for this system either, just a collaboration with memory makers – you could well be looking at different PC layouts in the future.
